Welcome to the Era of AI Acceleration for the Mainframe

October 28, 2025

New Skill within the FERIT Mainframe Academy: COBOL PROGRAMMING

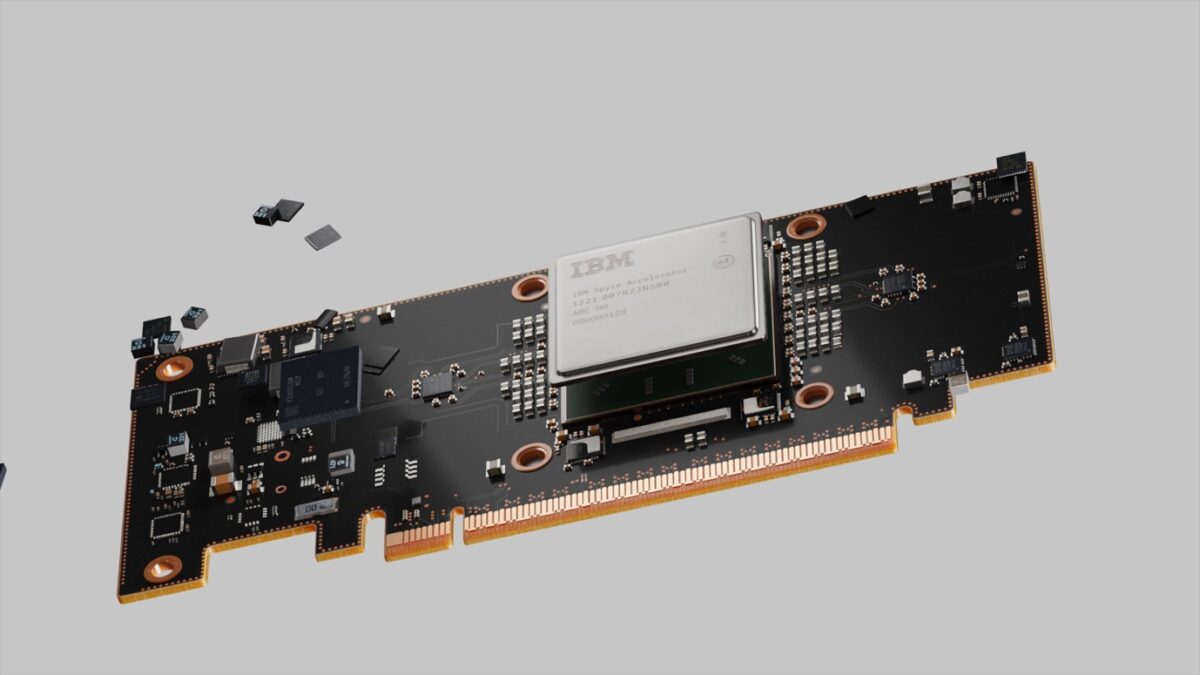

In a world where generative artificial intelligence and AI agents are transforming how enterprises operate, IBM has introduced a new accelerator card for large-scale computing systems: the IBM Spyre Accelerator. Designed for the IBM Z and IBM LinuxONE platforms, Spyre enables AI workloads to run directly on the mainframe itself, without compromising security, resilience, or latency.

Why does this matter for the mainframe?

1. AI where the data lives

Traditionally, AI models are hosted on separate servers or in the cloud, requiring data to be moved outside the main system. With IBM Spyre, AI inference can be executed in place — directly within the mainframe, with minimal latency and maximum security. This means data never leaves the protected environment, reducing risk while enabling near real-time responsiveness.
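The latency argument can be made concrete with a toy model. The sketch below assumes, purely for illustration, that a remote inference call pays a network round trip and a serialization cost on top of model execution time, while in-place inference pays only the execution time. The `LatencyBudget` class and every number in it are hypothetical placeholders, not measurements of Spyre or of any IBM system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LatencyBudget:
    """Per-request latency components in milliseconds (hypothetical values)."""

    model_execution_ms: float
    network_round_trip_ms: float = 0.0  # zero when inference runs next to the data
    serialization_ms: float = 0.0       # cost of packaging data for transfer

    def total_ms(self) -> float:
        """Sum of all components for a single request."""
        return (
            self.model_execution_ms
            + self.network_round_trip_ms
            + self.serialization_ms
        )


# Placeholder numbers chosen only to illustrate the shape of the comparison.
remote = LatencyBudget(
    model_execution_ms=1.0,
    network_round_trip_ms=20.0,
    serialization_ms=2.0,
)
in_place = LatencyBudget(model_execution_ms=1.0)

print(f"Remote inference:   {remote.total_ms():.1f} ms per request")
print(f"In-place inference: {in_place.total_ms():.1f} ms per request")
```

In this model the execution time is the same in both cases. The difference comes entirely from the transfer terms, which is the part that running inference on the mainframe is meant to remove.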

2. Unmatched security and operational continuity

IBM Z and LinuxONE are already renowned for their exceptional levels of reliability and security — including encryption at rest and in transit, Trusted Execution Environments, and more. IBM Spyre is designed to fit seamlessly into this framework, delivering low latency, high throughput, and support for mission-critical workloads. For organizations in banking, finance, healthcare, and other regulated industries, it enables the integration of AI without sacrificing the hallmarks that define these systems: reliability and security.

3. Scalability and efficiency

- The IBM Spyre Accelerator is built using 5 nm technology and features 32 accelerator cores, with the option to cluster up to 48 cards in a single IBM Z or LinuxONE system.

- According to IBM, the LinuxONE Emperor 5 can process up to 450 billion inferences per day with sub-1 ms response times in fraud-detection models.

- This enables a unified infrastructure capable of handling traditional transactions, data analytics, and generative AI workloads — all on the same machine, without relying on external resources.
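To put the figures above in perspective, the short Python sketch below does the arithmetic: it converts the quoted daily inference figure into an average per-second rate and multiplies out the core count for a full 48-card configuration. The function and constant names are illustrative. The results are plain unit conversions of the numbers quoted above, not additional published benchmarks.

```python
# Back-of-the-envelope arithmetic on the figures quoted in the list above.
# All names are illustrative; the inputs come from the article text.

SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

INFERENCES_PER_DAY = 450_000_000_000  # "up to 450 billion inferences per day"
CORES_PER_CARD = 32                   # accelerator cores per Spyre card
MAX_CARDS_PER_SYSTEM = 48             # maximum cards in a single system


def inferences_per_second(per_day: int) -> float:
    """Average sustained rate implied by a daily inference total."""
    return per_day / SECONDS_PER_DAY


def total_cores(cards: int, cores_per_card: int = CORES_PER_CARD) -> int:
    """Accelerator cores across a given number of cards."""
    return cards * cores_per_card


if __name__ == "__main__":
    rate = inferences_per_second(INFERENCES_PER_DAY)
    print(f"Average rate: {rate:,.0f} inferences per second")
    print(f"Cores in a full configuration: {total_cores(MAX_CARDS_PER_SYSTEM):,}")
```

The daily figure works out to an average of roughly 5.2 million inferences per second, and a fully populated system carries 48 × 32 = 1,536 accelerator cores.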

4. Built for generative and agent-based AI

IBM Spyre is more than just a faster inference engine. It’s designed for modern AI paradigms — LLMs (encoder, decoder, and encoder-decoder architectures), agent-based AI (autonomous agents, chatbots, automated processes), and multimodal analysis. This marks a new era: the mainframe becomes an active AI hub, not merely a backend for legacy transactions. With this, IBM z17 and LinuxONE Emperor 5 emerge as the first enterprise systems fully conceived and equipped for the AI era.

What does this mean for users in Croatia and the region?

For organizations that already use IBM Z or LinuxONE platforms, are considering them, or are planning a modernization, this represents an opportunity to:

- Preserve existing infrastructure and investments in mainframe technology, while integrating AI capabilities previously available only in the cloud or on separate GPU/TPU clusters.

- Reduce architectural complexity by eliminating the need to move data outside the main system, which saves time, lowers operational costs, and minimizes security risks.

- Meet regulatory requirements (GDPR, financial oversight, healthcare data protection) with infrastructure that already complies with the highest security standards.

- Enable collaboration across academia, research centers, and industry partners to experiment with generative and agent-based AI directly on the mainframe platform — unlocking opportunities for innovation, education, and local ecosystem growth.

Announcements Related to the IBM Spyre Accelerator

- On October 7, 2025, IBM announced the commercial availability of the Spyre Accelerator, effective October 28, for the IBM z17 and LinuxONE 5 systems. (Source: IBM Newsroom)

- The accelerator was developed to support generative AI (LLMs) and agent-based AI scenarios, while maintaining the high levels of reliability, security, and performance expected in enterprise environments. (Source: IBM Announcements)

- For LinuxONE, Spyre is available as an additional PCIe card featuring 32 accelerator cores, 25.6 billion transistors, and 128 GB of LPDDR5 memory. It is designed to enable AI workloads to run where the data resides: directly on the main system. (Source: LinuxONE news)
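The per-card specifications above can be scaled to a multi-card configuration with simple multiplication. The sketch below assumes that resources add up linearly across cards and uses the 48-card per-system maximum mentioned earlier in the article. The `CardSpec` class and `aggregate` function are hypothetical names introduced here for illustration, and the totals are derived arithmetic rather than published specifications.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CardSpec:
    """Per-card figures as quoted in the article."""

    cores: int = 32
    memory_gb: int = 128
    transistors: int = 25_600_000_000  # 25.6 billion


def aggregate(cards: int, spec: CardSpec = CardSpec()) -> dict:
    """Scale per-card figures linearly to a given number of cards."""
    if cards < 0:
        raise ValueError("cards must be non-negative")
    return {
        "cards": cards,
        "cores": cards * spec.cores,
        "memory_gb": cards * spec.memory_gb,
        "transistors": cards * spec.transistors,
    }


if __name__ == "__main__":
    full = aggregate(48)  # 48 is the per-system maximum stated in the article
    print(f"Cores:       {full['cores']:,}")
    print(f"Memory:      {full['memory_gb']:,} GB")
    print(f"Transistors: {full['transistors']:,}")
```

Under the linear-scaling assumption, 48 cards give 1,536 cores and 6,144 GB of LPDDR5 memory in total. How much of that memory a single model can address depends on how cards are grouped, which this sketch does not model.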